Scraping Websites

Introduction

- Scraping is the process of traversing web pages and collecting data from them. People scrape websites for many reasons: to get information about companies, fetch the latest news, get stock prices, or just create a dataset for the next big AI model.

- In this article, we will focus on two different techniques to scrape websites.

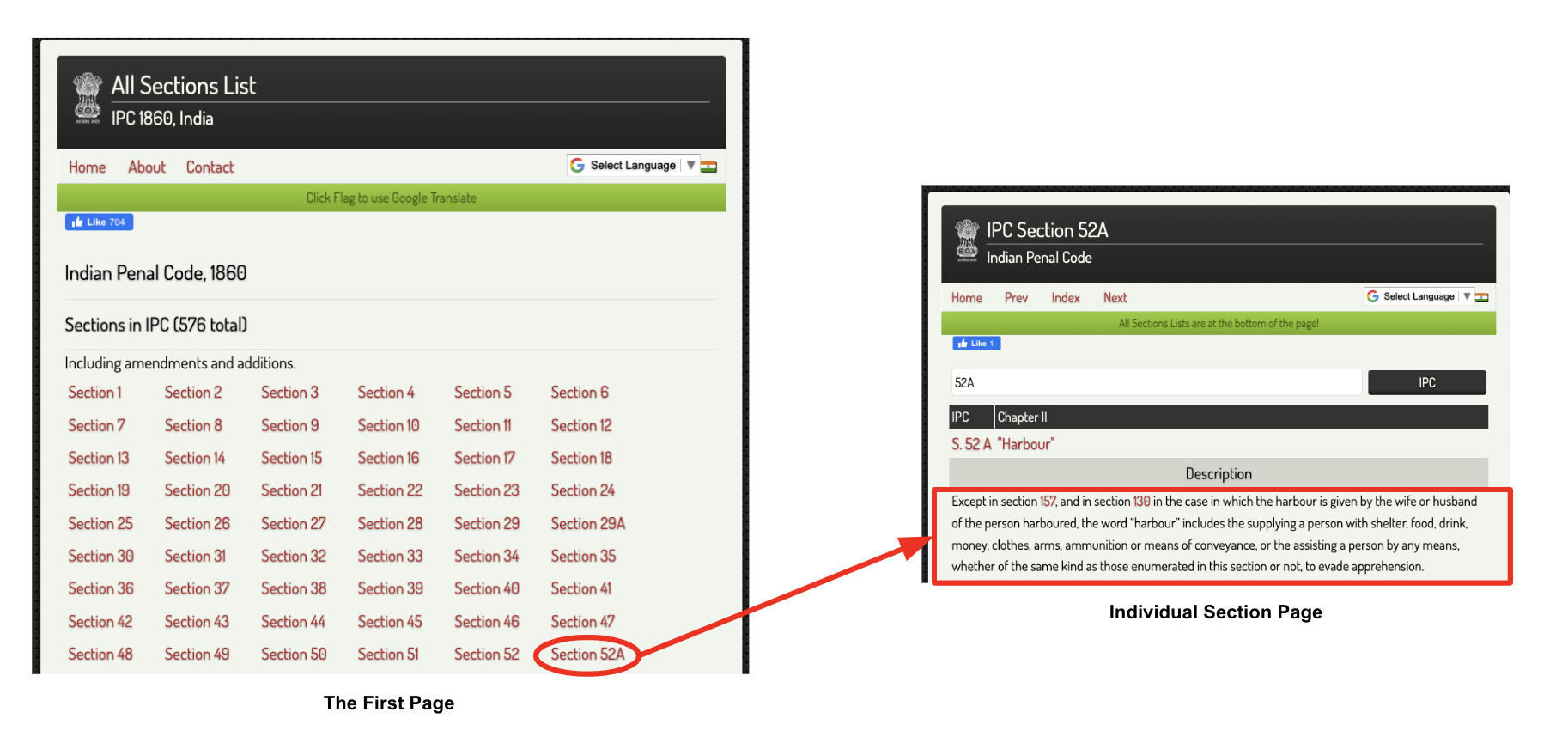

- For static websites, we can use Scrapy. As an example, we will scrape data from the Devgan website, which hosts details of the different sections of the Indian Penal Code (IPC). [Github Code]

- For dynamic websites, we can use Selenium in combination with BeautifulSoup4 (BS4). As an example, we will scrape data from Google search results. In short, Selenium is an open-source tool for browser automation; we will use it to open the browser and load the website, since dynamic websites populate their data only once the page is completely loaded. To extract the data from the loaded page, we will use BS4.

Warning

This article is purely for educational purposes. I highly recommend reviewing the website's Terms of Service (ToS) or getting the website owner's permission before scraping.

Static Website scrape using Scrapy

Understanding the website

- Before we even start scraping, we need to understand the structure of the website. This is very important, as we want to get an idea of (1) what we want to scrape and (2) where that data is located.

- Our goal is to scrape the description for each section in the IPC. As per the website flow above, the complete process can be divided into two parts:

- First, we need to traverse to the main page and extract the link of each section.

- Next, we need to traverse to each individual section page and extract the description details present there.

Data extraction methods

- Now, let's also look into different methods exposed by Scrapy to extract data from the web pages. The basic idea is that scrapy downloads the web page source code in HTML and parse it using different parsers. For this, we can either use

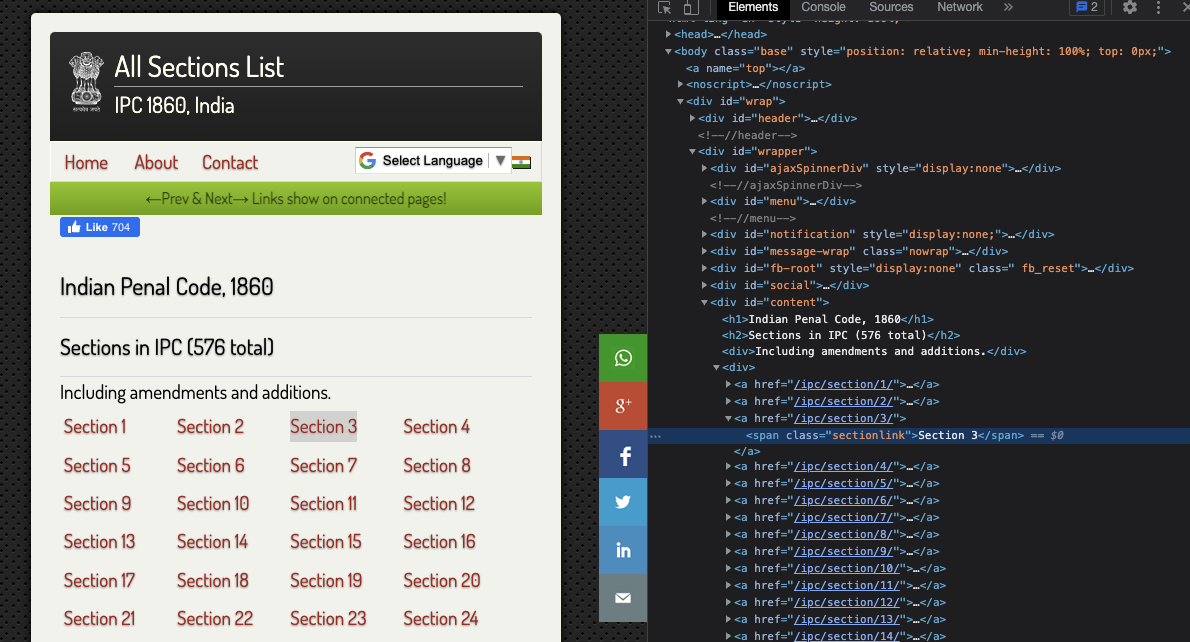

XPathsorCSSselectors. The choice is purely up to us. - We can begin with the main page and try to find the link of each section. For this, you can open inspect option from your browser by right clicking on any of the sections and select

inspect. This should show you the source code. Next, try to find the position of the tag where each section is defined. Refer the image below, and you can see that each section is within<a>tag inside the<div id="content">tag. Thehrefcomponent will give you the link of the section and the<span>tag inside gives the section name.

- To try out the extraction, we can use Scrapy's interactive shell. Activate the virtual environment where Scrapy is installed and type `scrapy shell '{website-link}'`; here it will be `scrapy shell http://devgan.in/all_sections_ipc.php`. This opens a playground where we can use the `response` variable to experiment with different extraction queries.

- To extract the individual sections, we can use the `CSS` query `response.css('div#content').css('a')`. Note that we first select with `{tag_type}#{id}` and then apply another `CSS` query, `a`. This gives us a list of all the `<a>` tags inside the `<div id="content">` tag.

- Now, from within a section, we can extract the section name with the `CSS` query `section.css('span.sectionlink::text').extract()`. For this to work, you should save the result of the previous query in a variable named `section`.

- A similar approach can be applied to extract the description from the next page. Just re-run the shell with one of the section links and try building the `CSS` query. Once you have all the queries ready, we can move on to the main coding part.

Note

You can refer to the official Scrapy documentation for more details on creating CSS or XPath queries.

Setup Scrapy project

- First, let us install the Scrapy package using pip by running the following command in the terminal: `pip install scrapy`. Do make sure to create your own virtual environment (VE), activate it, and then install the package in that environment. If you are unsure about VEs, refer to my snippets on the same topic.

- Next, let us set up the Scrapy project. Go to your directory of choice and run the command `scrapy startproject tutorial`. This will create a project with the folder structure shown below. We can ignore most of the files created here; our main focus will be on the `spiders/` directory.

    tutorial/
        scrapy.cfg            # deploy configuration file
        tutorial/             # project's Python module, you'll import your code from here
            __init__.py
            items.py          # project items definition file
            middlewares.py    # project middlewares file
            pipelines.py      # project pipelines file
            settings.py       # project settings file
            spiders/          # a directory where you'll later put your spiders
                __init__.py

Note

The above folder structure is taken from the Scrapy Official Tutorial

Create your Spider

- Usually we create one spider to scrape one website, and we will do exactly that for the Devgan website. So let's create a spider in `spiders/devgan.py`. The code is shown below,


- Now let us try to understand the code line by line:
    - Line 2: Importing the `scrapy` package.
    - Line 5: Defining the spider class, which inherits from the `scrapy.Spider` class.
    - Line 6-7: We define the name of the spider and allow the spider to crawl the domain `devgan.in`.
    - Line 9-14: We define the `start_requests` method. This method is called when the spider is executed. For each URL to scrape, it issues a `scrapy.Request`, and the scraping function set in the `callback` parameter is called with the response.
    - Line 16: We define the scraping function `parse_mainpage` for the main page. Note that this function receives `response` as an argument, which contains the source code returned by the server for the main page URL.
    - Line 18-29: We start by identifying the links to the individual section pages, store them in `sections`, and call the scraping function `parse_section` for each section. Before that, we also extract the title, link and section name from the main page using the queries we created before. One point to remember: this particular example is a little complex, as we want to traverse further into the section pages. For this, we again call `scrapy.Request` for the individual section links. Finally, we want to pass the data collected from this page to the section page, as we will consolidate all data for an individual section there and return it. For this, we use the `cb_kwargs` parameter to pass the metadata to the next function.
    - Line 31-35: We extract the description from the section page using the `CSS` query. We add the description to the metadata and return the complete data that is to be persisted.

Executing the spider

- To run the spider, go to the project's root directory and execute the following command: `scrapy crawl devgan -O sections_details.csv`. Here, `devgan` is the name of the spider we created earlier, and `-O` sets the output file name to `sections_details.csv`, overwriting the file if it already exists. Scrapy infers the `csv` output format from the file extension, so the older `-t csv` option is not needed. This will create a CSV file with all details of the sections as separate columns, as shown below (only 2 rows).

| title | link | section | description |
|---|---|---|---|
| IPC Section 1... | http://... | Section 1 | This Act shall be called the Indian Penal Code... |
| IPC Section 2... | http://... | Section 2 | Every person shall be liable to punishment un.... |

And that's it! Cheers!

Dynamic Website scrape using Selenium and BS4

Understanding the website

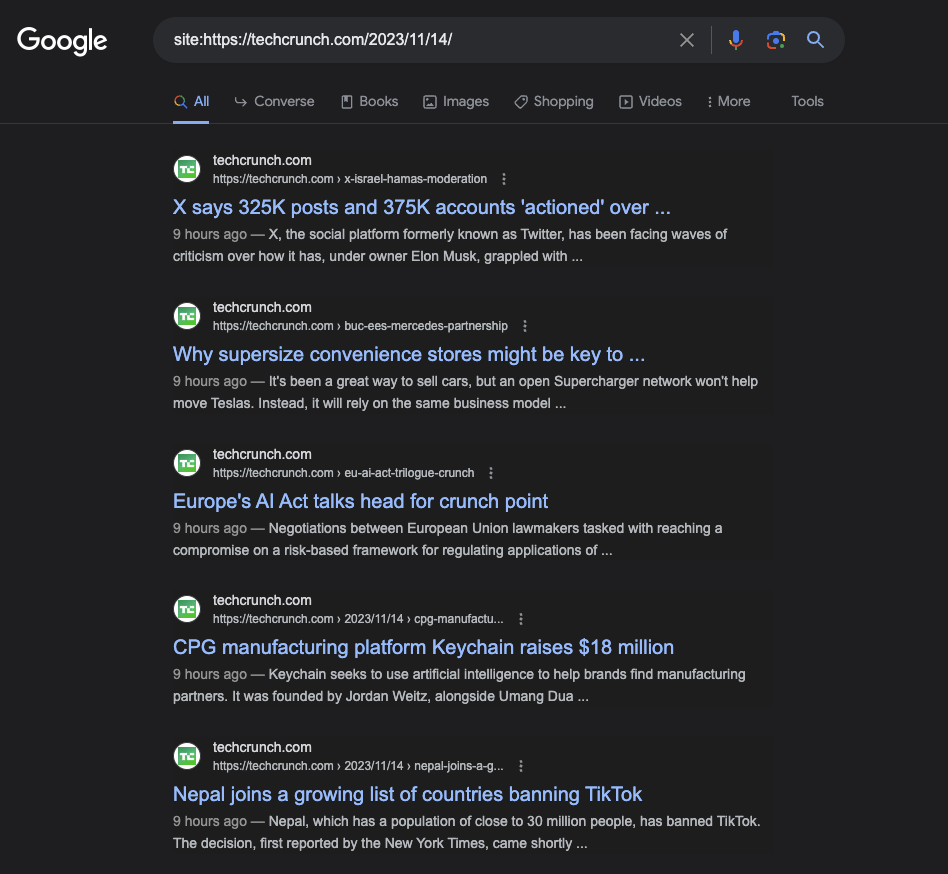

- Well, everyone knows Google and has used it at least once in their life. Nevertheless, as an example, if we want to find all of the latest news from TechCrunch, this is what the Google search will look like.

- If you look at the page source of the above screen, you will only see JavaScript code that does not contain any of the data. This is because Google is a dynamic, event-driven website: the results are populated by JavaScript that runs in the browser once the page has loaded. Because of this, we cannot use Scrapy alone, as it cannot run JavaScript; what we need is a browser to run the code. That's where Selenium and BS4 come in.

Selenium and BS4 Automation

- We will code a generic function to automate the process of opening the Chrome browser, loading the website, and extracting the data from it. To run it for our TechCrunch example, we just need to change the input parameter.

Note

Before starting make sure to install the Selenium package and drivers as explained here.


Let's understand the code in detail,

- Line 1-6: Importing the required packages.
- Line 8-11: Initializes the Chrome WebDriver with the specified options.
- Line 14-40: Defines a function to scrape Google. It takes a search query, the number of pages to scrape, and an optional starting page. Inside the function, we iterate over the number of pages specified, constructing a URL for each page of Google search results based on the query and the current page. The WebDriver is used to navigate to the URL. Then, we use BS4 to parse the page source, extract the title, link, and description of each search result, and append them to the `results` list. At each iteration, we save the results to a CSV. Finally, we close the driver and return the `results`.
- Line 42-49: Defines the `save_results_to_csv` function to save the scraped results to a CSV file. It uses Python's `csv` module to write the title, link, and description of each search result to a CSV file.
- Line 52: We call the `scrape_google` function to scrape the first 10 pages of Google search results for the specified query.
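The steps described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not a definitive listing: the helper names `build_google_url` and `parse_results`, the `path` parameter, and the result selectors (`div.g`, `h3`, `div.VwiC3b`) are hypothetical, and Google changes its markup often, so the selectors will likely need adjusting. The driver is also created inside the function here, so the line numbers in the walkthrough above will not line up with this sketch.

```python
import csv
from urllib.parse import urlencode

RESULTS_PER_PAGE = 10  # Google shows 10 results per page by default


def build_google_url(query, page):
    """Return the search URL for a zero-based results page."""
    params = {"q": query, "start": page * RESULTS_PER_PAGE}
    return "https://www.google.com/search?" + urlencode(params)


def parse_results(html):
    """Extract title, link and description from one results page."""
    # Imported here so the URL and CSV helpers work without BS4 installed.
    from bs4 import BeautifulSoup

    soup = BeautifulSoup(html, "html.parser")
    results = []
    # Assumption: each result sits in a <div class="g"> block.
    for block in soup.select("div.g"):
        title = block.find("h3")
        anchor = block.find("a", href=True)
        if title is None or anchor is None:
            continue
        # Assumption: hypothetical class name for the snippet text.
        snippet = block.select_one("div.VwiC3b")
        results.append({
            "title": title.get_text(strip=True),
            "link": anchor["href"],
            "description": snippet.get_text(" ", strip=True) if snippet else "",
        })
    return results


def save_results_to_csv(results, path):
    """Write the title, link and description of each result to a CSV file."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=["title", "link", "description"])
        writer.writeheader()
        writer.writerows(results)


def scrape_google(query, num_pages, start_page=0, path="results.csv"):
    """Load each results page in Chrome, parse it, and save as we go."""
    # Imported here so the helpers above can be used without Selenium.
    from selenium import webdriver

    options = webdriver.ChromeOptions()  # add any Chrome flags you need here
    driver = webdriver.Chrome(options=options)
    results = []
    try:
        for page in range(start_page, start_page + num_pages):
            driver.get(build_google_url(query, page))
            results.extend(parse_results(driver.page_source))
            save_results_to_csv(results, path)  # save at each iteration
    finally:
        driver.quit()  # close the browser even if a page fails
    return results
```

With this layout, a call such as `scrape_google("techcrunch news", num_pages=10)` (the query string is only an example) would open Chrome, walk through the first 10 result pages, and write the rows collected so far to `results.csv` after every page.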

And we are done!