GPT models

Introduction

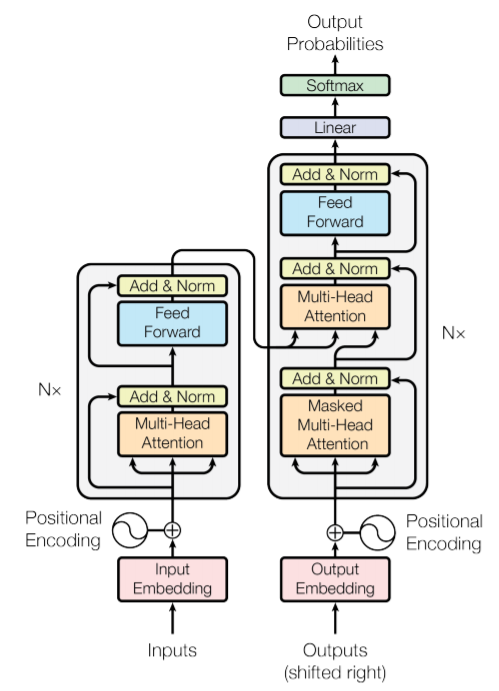

- GPT stands for "Generative Pre-trained Transformer". It is an autoregressive language model which is based on the decoder block of the Transformer architecture.

- The idea behind the model is similar to any text generation model, i.e. it takes a prompt as input and generates text as output. The difference is scale: GPT models have between roughly 100 million and 175 billion tunable parameters, which lets them learn much more than basic language syntax or word-level contextual information. In fact, it has been shown that GPT models store real-world information as well, and there has been interesting recent research on how much knowledge can be packed into the parameters (roberts2020knowledge).

- GPT models are also famous because they can be easily applied to many downstream NLP tasks. This is because they have been shown to be very good at few-shot learning, i.e. they can perform tasks they were never explicitly trained for when given only a few examples!

- This led to a new and interesting paradigm of prompt engineering, where crafting a crisp prompt can lead to good results. Instead of playing around with training, we can improve accuracy just by modifying the input prompt to the model. Of course, for better accuracy, fine-tuning the model is still preferred. A sample prompt is provided below.

## prompt input - this can be passed to a GPT based model

Below are some examples for sentiment detection of movie reviews.

Review: I am sad that the hero died.

Sentiment: Negative

Review: The ending was perfect.

Sentiment: Positive

Review: The plot was not so good!

Sentiment:

## The model should predict the sentiment for the last review.

Tip

Copy the prompt from above and try it with the GPT-Neo 2.7B model. You should get "Negative" as output! We just created a sentiment detection module without a single training epoch!
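The prompt above follows a simple pattern, so it can also be assembled programmatically. The following is a minimal sketch; the helper name `build_few_shot_prompt` and its arguments are illustrative and not part of any library.

```python
def build_few_shot_prompt(task_description, examples, query):
    """Assemble a few-shot sentiment prompt.

    examples: list of (review, sentiment) pairs shown to the model.
    query: the review whose sentiment the model should complete.
    """
    lines = [task_description, ""]
    for review, sentiment in examples:
        lines.append(f"Review: {review}")
        lines.append(f"Sentiment: {sentiment}")
        lines.append("")
    # Leave the last sentiment empty so that the model fills it in.
    lines.append(f"Review: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Below are some examples for sentiment detection of movie reviews.",
    [
        ("I am sad that the hero died.", "Negative"),
        ("The ending was perfect.", "Positive"),
    ],
    "The plot was not so good!",
)
print(prompt)
```

Adding or swapping examples then only requires changing the list of pairs, which makes it easy to experiment with different prompts.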

Analysis

Comparing GPT models (basic details)

- There are two famous series of GPT models:

  - GPT-{1,2,3}: the original series released by OpenAI, a San Francisco-based artificial intelligence research laboratory. It includes GPT-1 (radford2018improving), GPT-2 (radford2019language), and GPT-3 (brown2020language).

  - GPT-{Neo, J}: the open-source series released by EleutherAI. For GPT-Neo, the architecture is quite similar to GPT-3, but training was done on The Pile, an 825 GB text dataset.

- Details of the models are as follows:

| models | released by | year | open-source | model size (parameters) |
|---|---|---|---|---|
| GPT-1 | OpenAI | 2018 | yes | 110M |
| GPT-2 | OpenAI | 2019 | yes | 117M, 345M, 774M, 1.5B |
| GPT-3 | OpenAI | 2020 | no | 175B |
| GPT-Neo | EleutherAI | 2021 | yes | 125M, 1.3B, 2.7B |
| GPT-J | EleutherAI | 2021 | yes | 6B |

Code

- The most recent open-source models from OpenAI and EleutherAI are GPT-2 and GPT-Neo, respectively. Because they share nearly the same architecture, most of the code for inference, training, and fine-tuning is the same. Hence, for brevity's sake, code for GPT-2 will be shared, and I will point out the changes required to make it work for the GPT-Neo model as well.

Inference of GPT-2 pre-trained model

- For a simple inference, we will load the pre-trained GPT-2 model and use it for a dummy sentiment detection task (using the prompt shared above).

- To make this code work for GPT-Neo:

  - import `GPTNeoForCausalLM`,

  - set `model_name = "EleutherAI/gpt-neo-2.7B"` (choose from any of the available model sizes),

  - use `GPTNeoForCausalLM` in place of `GPT2LMHeadModel` when loading the model.

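A minimal sketch of such an inference run, assuming the Hugging Face `transformers` library with a PyTorch backend and a version that supports `max_new_tokens`. The names `PROMPT`, `load_gpt2`, and `complete` are illustrative; the library is imported inside the loader so that the helpers can be defined without it.

```python
PROMPT = """Below are some examples for sentiment detection of movie reviews.

Review: I am sad that the hero died.
Sentiment: Negative

Review: The ending was perfect.
Sentiment: Positive

Review: The plot was not so good!
Sentiment:"""


def load_gpt2(model_name="gpt2"):
    """Load a pre-trained tokenizer and model (weights are downloaded on first use)."""
    # For GPT-Neo, import and use GPTNeoForCausalLM here instead of GPT2LMHeadModel.
    from transformers import GPT2LMHeadModel, GPT2Tokenizer

    tokenizer = GPT2Tokenizer.from_pretrained(model_name)
    model = GPT2LMHeadModel.from_pretrained(model_name)
    model.eval()  # inference mode: disables dropout
    return tokenizer, model


def complete(prompt, tokenizer, model, max_new_tokens=3):
    """Greedily generate a few tokens after the prompt and return the decoded text."""
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(
        **inputs,
        max_new_tokens=max_new_tokens,
        do_sample=False,  # greedy decoding, so the result is deterministic
        pad_token_id=tokenizer.eos_token_id,  # GPT-2 has no dedicated pad token
    )
    # The returned sequence holds the prompt tokens followed by the new tokens.
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)


# Usage (downloads the model weights on the first run):
#   tokenizer, model = load_gpt2("gpt2")
#   print(complete(PROMPT, tokenizer, model))
```

Greedy decoding with a small `max_new_tokens` is a deliberate choice here, since only a one-word label is expected after `Sentiment:`.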

Note

Because GPT-2 is a decoder-only language model with no separate encoder block, its output contains the input prompt followed by the newly generated text. In contrast, the output of a T5 model contains only the new generation (without repeating the input), as it has an encoder-decoder architecture.

Finetuning GPT-2 (for sentiment classification)

- Tweet sentiment data can be downloaded from here

- We add special tokens to the tokenizer so that the model learns where the prompt starts and ends. This is helpful later during the testing phase: we don't want the model to keep writing the next word indefinitely; it should know when to stop. This is done by setting the `eos_token` and training the model to predict it.

Note

The original GPT-2 paper and implementation use `<|endoftext|>` as both the `eos_token` and the `bos_token` (beginning of sentence). We can do the same, but for clarity in the implementation, we can also use `<|startoftext|>` as a separate `bos_token`.
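A minimal sketch of this setup, assuming the Hugging Face `transformers` library. The prompt template (`Tweet: ... Sentiment: ...`), the `<|pad|>` token, and the helper names are illustrative assumptions, not taken from the original implementation.

```python
BOS_TOKEN = "<|startoftext|>"
EOS_TOKEN = "<|endoftext|>"
PAD_TOKEN = "<|pad|>"  # assumed padding token; GPT-2 does not define one by default


def format_example(tweet, sentiment=None):
    """Wrap a tweet in the prompt template.

    With a sentiment, return a full training example that ends in EOS_TOKEN.
    Without one, return the open prompt to be completed at test time.
    """
    prompt = f"{BOS_TOKEN}Tweet: {tweet}\nSentiment:"
    if sentiment is None:
        return prompt
    return f"{prompt} {sentiment}{EOS_TOKEN}"


def load_tokenizer_and_model(model_name="gpt2"):
    """Load GPT-2 with the special tokens registered in the tokenizer."""
    from transformers import GPT2LMHeadModel, GPT2Tokenizer

    tokenizer = GPT2Tokenizer.from_pretrained(
        model_name, bos_token=BOS_TOKEN, eos_token=EOS_TOKEN, pad_token=PAD_TOKEN
    )
    model = GPT2LMHeadModel.from_pretrained(model_name)
    # New tokens were added, so the embedding matrix must grow to match.
    model.resize_token_embeddings(len(tokenizer))
    return tokenizer, model


print(format_example("I love this phone", "positive"))
```

Because every training example ends with the `eos_token`, generation at test time can stop as soon as the model emits that token.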

- We also define how to process the training data inside the `data_collator`. The first two elements within the collator are `input_ids`, the tokenized prompt, and `attention_mask`, a simple 1/0 vector that denotes which part of the tokenized vector is the prompt and which part is padding. The last part is quite interesting: we pass the input data itself as the label instead of just the sentiment labels. This is because we are training a language model, so we want the model to learn the pattern of the whole prompt and not just the sentiment class. In a sense, the model learns to predict the words of the input tweet plus the sentiment, as structured in the prompt, and in the process learns the sentiment detection task.

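The collator logic can be sketched as follows. This is a simplified illustration, not the original implementation: it assumes each dataset item is an `(input_ids, attention_mask)` pair of equal-length lists that are already padded, and the function names are hypothetical.

```python
def collate(batch):
    """Group a list of (input_ids, attention_mask) pairs into model inputs.

    The labels are a copy of input_ids: a causal language model is trained
    to reproduce the whole prompt, tweet and sentiment included.
    """
    input_ids = [list(ids) for ids, _ in batch]
    attention_mask = [list(mask) for _, mask in batch]
    return {
        "input_ids": input_ids,
        "attention_mask": attention_mask,
        "labels": [list(ids) for ids in input_ids],
    }


def data_collator(batch):
    """Tensor version of collate, suitable for a PyTorch training loop."""
    import torch  # imported lazily so collate() can be used without PyTorch

    return {name: torch.tensor(values) for name, values in collate(batch).items()}


# Two already-padded examples; token id 0 stands in for the padding token here.
batch = [([5, 6, 7, 0], [1, 1, 1, 0]), ([8, 9, 0, 0], [1, 1, 0, 0])]
features = collate(batch)
print(features["labels"])
```

A function like `data_collator` can be passed as the `data_collator` argument of the `transformers` `Trainer`. No manual shifting of the labels is needed, as `GPT2LMHeadModel` shifts them internally when computing the loss.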

Inference of GPT-3

- Running a GPT-3 model is super easy using the OpenAI Python package.

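A minimal sketch of such a call, assuming the legacy (pre-1.0) `openai` Python package, whose interface has since changed. The helper names, the default `engine`, and the decoding settings are illustrative choices.

```python
def first_completion(response):
    """Extract the generated text of the first choice from a completion response."""
    return response["choices"][0]["text"].strip()


def complete_with_gpt3(prompt, api_key, engine="davinci", max_tokens=5):
    """Send the prompt to a GPT-3 engine and return the generated text."""
    import openai  # legacy (pre-1.0) package, imported lazily

    openai.api_key = api_key
    response = openai.Completion.create(
        engine=engine,
        prompt=prompt,
        max_tokens=max_tokens,
        temperature=0,  # pick the most likely tokens for a stable answer
        stop="\n",  # stop at the end of the line, since only a label is expected
    )
    return first_completion(response)


# Usage (requires a valid API key and network access):
#   print(complete_with_gpt3("Review: The plot was not so good!\nSentiment:", "YOUR_API_KEY"))
```

Unlike local GPT-2 inference, the returned text contains only the completion, not the prompt.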

Finetuning GPT-3

- While GPT-3 is not open source, OpenAI provides a paid option to finetune the model. At the time of writing, free credits were provided to new users, which is another reason to go and register now.

- They expose several APIs to perform finetuning. In a sense they are doing most of the heavy lifting by making the finetuning process super easy.

- To begin with, make sure you have the OpenAI Python library installed. Do it by running `pip install openai`.

- Then make sure the data is in the correct format. Basically, you need a `.csv` file with at least two columns, `prompt` and `completion`, containing the respective data. The documentation suggests having at least 100 examples.

- Next, we will prepare a `jsonl` file from the `csv` file, as OpenAI expects the data in this format. They also provide a command-line tool for this conversion.

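As an alternative to the command-line tool, the conversion itself can be sketched with the Python standard library. This is only an illustration of the target format (one JSON object with `prompt` and `completion` keys per line); it performs none of the checks the official tool may apply, and the function name is hypothetical.

```python
import csv
import json


def csv_to_jsonl(csv_path, jsonl_path):
    """Convert a CSV with `prompt` and `completion` columns to JSONL.

    Returns the number of examples written.
    """
    count = 0
    with open(csv_path, newline="", encoding="utf-8") as src, \
            open(jsonl_path, "w", encoding="utf-8") as dst:
        for row in csv.DictReader(src):
            # Keep only the two expected columns; extra columns are ignored.
            record = {"prompt": row["prompt"], "completion": row["completion"]}
            dst.write(json.dumps(record) + "\n")
            count += 1
    return count


# Usage (file names are placeholders):
#   n = csv_to_jsonl("data.csv", "data.jsonl")
#   print(f"wrote {n} examples")
```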

- Now we will upload the prepared data to the OpenAI server. This can be done from Python with the `openai` package.

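A minimal sketch of the upload step, assuming the legacy (pre-1.0) `openai` package, which reads the API key from the `OPENAI_API_KEY` environment variable. The function name and the optional `client` argument (which allows a stand-in object for testing) are illustrative.

```python
def upload_training_file(jsonl_path, client=None):
    """Upload a JSONL training file and return the file id assigned by the server."""
    if client is None:
        import openai as client  # legacy (pre-1.0) package, imported lazily

    with open(jsonl_path, "rb") as f:
        response = client.File.create(file=f, purpose="fine-tune")
    # The id is needed later to reference this file when starting the finetuning.
    return response["id"]


# Usage (requires a valid API key and network access; the path is a placeholder):
#   file_id = upload_training_file("data.jsonl")
```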

- Now we will train the model. This is also done from Python, using the id of the uploaded file.

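A minimal sketch of starting the finetuning job, again assuming the legacy (pre-1.0) `openai` package. The function name, the default base model `curie`, and the `client` argument are illustrative assumptions.

```python
def start_finetune(training_file_id, base_model="curie", client=None):
    """Start a finetuning job on an uploaded file and return the job id."""
    if client is None:
        import openai as client  # legacy (pre-1.0) package, imported lazily

    response = client.FineTune.create(
        training_file=training_file_id,  # id returned by the file upload
        model=base_model,  # base engine to finetune
    )
    return response["id"]


# Usage (requires a valid API key and network access; the id is a placeholder):
#   job_id = start_finetune("YOUR_FILE_ID")
```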

- And there we go, the finetuning has started! You can monitor the progress by running `openai.FineTune.list()`. The last entry will contain a `status` key that will change to `succeeded` when the finetuning is complete. Also, note down the model name from the `fine_tuned_model` key, as you will need it later to access the trained model. By the way, if you only want to print the last entry, try `openai.FineTune.list()['data'][-1]` instead.

- After the finetuning is done, you can use the model as usual from the playground or the API!
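The monitoring step can be wrapped in two small helpers. This is a sketch under the same assumption of the legacy (pre-1.0) `openai` package; the helper names and the `client` argument are illustrative.

```python
def latest_finetune(client=None):
    """Return the most recent entry from the list of finetuning jobs."""
    if client is None:
        import openai as client  # legacy (pre-1.0) package, imported lazily

    return client.FineTune.list()["data"][-1]


def finetuned_model_name(job):
    """Return the finetuned model name once the job has succeeded, else None."""
    if job["status"] == "succeeded":
        return job["fine_tuned_model"]
    return None


# Usage (requires a valid API key and network access):
#   name = finetuned_model_name(latest_finetune())
#   if name is None:
#       print("finetuning is still in progress")
```

Returning `None` while the job is unfinished lets the caller decide how often to check again.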

Tip

OpenAI provides multiple models (engines) with different accuracy and speed. These are Davinci, Curie, Babbage and Ada, in descending order of accuracy but increasing order of speed.

Additional materials

- The Illustrated GPT-2 (Visualizing Transformer Language Models) - Link