DeepWalk

- DeepWalk [1] is a technique for creating semantic embeddings of the nodes of a graph. To do this, DeepWalk employs the Skip-gram technique of Word2Vec (w2v). For context, w2v is a word embedding technique that learns a vector representation for each word.

- In the Skip-gram training procedure, the whole problem is cast as a classification problem: the neural network tries to predict which words are most likely to appear in the context of a given word. Given a sequence of words that "naturally" appears in some sentence, the input can be any word in the sequence and the output can be any of its surrounding words (within some window). Training uses a neural network with a single hidden layer, where the input word is fed as a one-hot encoding and a softmax over the output is trained to assign a large value to the context word.
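To make the training data concrete, here is a minimal sketch of how (input word, context word) pairs can be generated from a sentence. The function name `skipgram_pairs` and the example sentence are illustrative assumptions, not part of any library.

```python
def skipgram_pairs(tokens, window_size=2):
    """Return (center, context) pairs for every token and each neighbour within the window."""
    pairs = []
    for i, center in enumerate(tokens):
        # Clip the window at the sentence boundaries.
        start = max(0, i - window_size)
        end = min(len(tokens), i + window_size + 1)
        for j in range(start, end):
            if j != i:  # a word is not its own context
                pairs.append((center, tokens[j]))
    return pairs


# Hypothetical example sentence, used only for illustration.
sentence = ["the", "quick", "brown", "fox"]
pairs = skipgram_pairs(sentence, window_size=1)
```

Each pair is one classification example: the first element is the one-hot input and the second is the target the softmax should score highly.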

Hint

For a brief introduction to Word2Vec, you can refer to the respective page in the Lazy Data Science Guide.

- But how do we create training data that captures the notion of context in graphs? While it seems intuitively easy to create training data for w2v from the plethora of text available online, how can we do something similar for a graph?

- As it happens, we can follow the same intuition for graphs by applying the random walk technique. Here, we start from one node and randomly move to one of its neighbors. We repeat this step \(L\) times, where \(L\) is the length of the random walk. After this, we restart the process. If we do this for all nodes (and \(M\) times for each node), we have in some sense transformed the graph structure into a text-like corpus that can be used to train w2v, where each word is a node and its context is defined by the nodes that appear near it in the walks.
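The walk generation described above can be sketched in a few lines of plain Python. This is a minimal illustration, assuming the graph is given as a dictionary that maps each node to a list of its neighbors; the function name `generate_walks` and the toy graph are hypothetical, and the authors' implementation may differ in its details.

```python
import random


def generate_walks(adjacency, walks_per_node, walk_length, seed=0):
    """Generate walks_per_node (M) uniform random walks of walk_length (L) steps from every node."""
    rng = random.Random(seed)
    nodes = list(adjacency)
    walks = []
    for _ in range(walks_per_node):
        rng.shuffle(nodes)  # visit the start nodes in a fresh random order each pass
        for start in nodes:
            walk = [start]
            for _ in range(walk_length):
                neighbors = adjacency[walk[-1]]
                if not neighbors:  # dead end: stop this walk early
                    break
                walk.append(rng.choice(neighbors))
            # Nodes become string tokens, so each walk reads like a sentence.
            walks.append([str(node) for node in walk])
    return walks


# Toy graph, assumed only for illustration.
adjacency = {0: [1, 2], 1: [0, 2], 2: [0, 1, 3], 3: [2]}
walks = generate_walks(adjacency, walks_per_node=2, walk_length=5)
```

The resulting list of token sequences plays the role of the sentence corpus in w2v training.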

Code

Author's Implementation

- The DeepWalk authors provide a Python implementation here. Installation details and other prerequisites are provided in the readme (Windows users should be wary of some installation and execution issues). The CLI exposes several algorithmic and optimization parameters, such as:

  - `input`: the path of the input file which contains the graph information. A graph can be stored in several formats; some of the well-known ones (and supported by the code) are the adjacency list (node followed by the list of all its neighbors) and the edge list (node-node pairs which share an edge).
  - `number-walks`: the number of random walks taken for each node.
  - `representation-size`: the dimension of the final embedding of each node. Also the size of the hidden layer in the skip-gram model.
  - `walk-length`: the length of each random walk.
  - `window-size`: the context window size in the skip-gram training.
  - `workers`: optimization parameter defining the number of independent processes to spawn for the training.
  - `output`: the path to the output embedding file.
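To illustrate the two storage formats, here is a small sketch that parses each of them into the same undirected neighbor map. The parser names and the sample text are hypothetical, and the exact conventions of the authors' loader (comment handling, whether every line lists all neighbors) are not confirmed here.

```python
def parse_adjacency_list(text):
    """Parse lines of the form 'node neighbor1 neighbor2 ...' into an undirected neighbor map."""
    adjacency = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):  # assumption: '#' marks a comment line
            continue
        node, *neighbors = line.split()
        adjacency.setdefault(node, set()).update(neighbors)
        for neighbor in neighbors:
            adjacency.setdefault(neighbor, set()).add(node)
    return adjacency


def parse_edge_list(text):
    """Parse lines of the form 'node_a node_b' into an undirected neighbor map."""
    adjacency = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        a, b = line.split()[:2]
        adjacency.setdefault(a, set()).add(b)
        adjacency.setdefault(b, set()).add(a)
    return adjacency


# The same triangle graph written in both formats (sample data for illustration).
from_adjlist = parse_adjacency_list("1 2 3\n2 3\n3\n")
from_edgelist = parse_edge_list("1 2\n1 3\n2 3\n")
```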

- The authors also provide example graphs, one of which is the Karate club dataset, stored in the adjacency list format. Now let's read the graph data and create node embeddings with:

deepwalk --input example_graphs/karate.adjlist --number-walks 10 \
--representation-size 64 --walk-length 40 --window-size 5 \
--workers 8 --output trained_model/karate.embeddings

- This performs the end-to-end analysis: loading the graph from the file, generating random walks, and finally training the skip-gram model on the walk data. We can then read the output embedding file, which contains a vector embedding for each node in the graph.
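Reading the embedding file back can be sketched as follows. This assumes the file uses the plain-text word2vec layout (a header line with the node count and the dimension, then one line per node with its id followed by the vector values); that layout is an assumption here, so check it against your own output file. The function name `parse_embeddings` and the sample text are hypothetical.

```python
def parse_embeddings(text):
    """Parse word2vec-style text (assumed layout) into a {node_id: vector} dictionary."""
    lines = [line for line in text.splitlines() if line.strip()]
    count, dim = map(int, lines[0].split())  # header: number of nodes, embedding size
    vectors = {}
    for line in lines[1:]:
        node, *values = line.split()
        vector = [float(v) for v in values]
        if len(vector) != dim:
            raise ValueError(f"node {node}: expected {dim} values, got {len(vector)}")
        vectors[node] = vector
    if len(vectors) != count:
        raise ValueError(f"expected {count} nodes, got {len(vectors)}")
    return vectors


# Tiny made-up sample in the assumed layout: 2 nodes, 3 dimensions.
sample = "2 3\n1 0.1 -0.2 0.3\n2 0.0 0.5 -0.5\n"
embeddings = parse_embeddings(sample)
```

For a real file, the same function could be applied to the contents read from the path passed as `output`.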

Karate Club Implementation

- A much simpler API is provided by the Python package KarateClub [2]. To do the same set of actions, we only need to load a graph, create a DeepWalk model, fit it on the graph, and read back the embedding.
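A minimal sketch of the KarateClub workflow is shown below, assuming the `karateclub` and `networkx` packages are installed. It is not the original listing from this page; the wrapper function `karate_club_embeddings` is a hypothetical name, and the imports sit inside it so that the packages are only needed when the function is called.

```python
def karate_club_embeddings(dimensions=128):
    """Fit KarateClub's DeepWalk on the Karate club graph and return the node embeddings."""
    import networkx as nx
    from karateclub import DeepWalk

    # The Karate club graph ships with networkx; its nodes are already
    # labeled 0..n-1, which is the indexing KarateClub expects.
    graph = nx.karate_club_graph()

    model = DeepWalk(dimensions=dimensions)  # other settings stay at the library defaults
    model.fit(graph)

    # One row per node, `dimensions` columns.
    return model.get_embedding()
```

Calling `karate_club_embeddings()` should return an array with one row for each of the 34 nodes of the Karate club graph.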


Note

The DeepWalk class also exposes the same parameters as the authors' code, and these can be tweaked for the desired experiment. Refer to their docs for more details.

Analysis

Seeing DeepWalk in Action

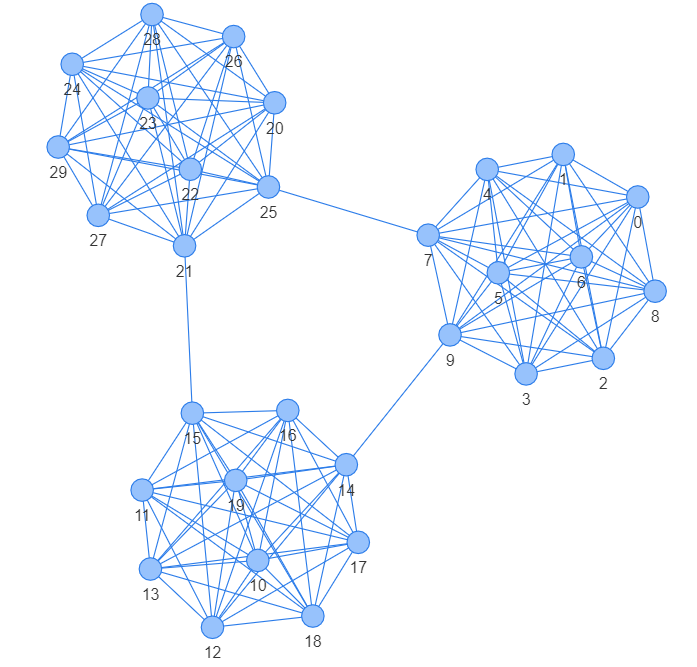

- To see DeepWalk in action, we will pick one graph and visualize the network as well as the final embeddings. For better understanding, I created a union of 3 complete graphs with some additional edges connecting them.
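A graph of this shape can be built with a short sketch like the one below. The clique size and the choice of connecting edges are assumptions made for illustration, since the exact graph used here is not specified; `connected_cliques` is a hypothetical helper name.

```python
from itertools import combinations


def connected_cliques(num_cliques=3, clique_size=5):
    """Return the edge list of several complete graphs joined in a chain by single edges."""
    edges = set()
    for c in range(num_cliques):
        members = range(c * clique_size, (c + 1) * clique_size)
        # A complete graph has an edge between every pair of its members.
        edges.update(combinations(members, 2))
    for c in range(num_cliques - 1):
        # Assumed bridge: last node of one clique to the first node of the next.
        edges.add(((c + 1) * clique_size - 1, (c + 1) * clique_size))
    return sorted(edges)


edges = connected_cliques(num_cliques=3, clique_size=5)
```

The edge list can then be handed to a graph library, for example `networkx.Graph(edges)`, before fitting DeepWalk on it.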

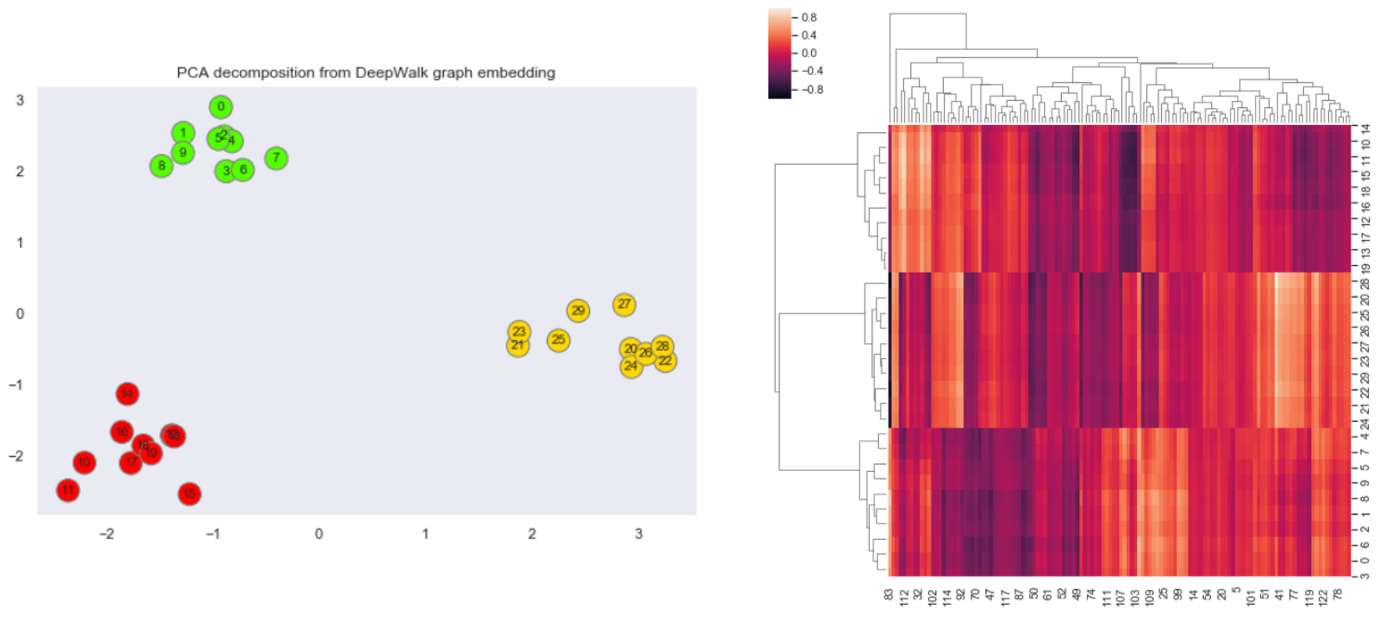

- Now, we will create DeepWalk embeddings of the graph. For this, we can use the KarateClub package; running DeepWalk with the default settings gives embeddings of 128 dimensions. To visualize them, I use the dimensionality reduction technique PCA, which projects the embeddings from \(\mathbb{R}^{128}\) down to \(\mathbb{R}^{2}\). I will also plot a heatmap of the 128-dimensional embeddings on the side.
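The PCA projection step can be sketched with NumPy alone. This is a minimal illustration, assuming NumPy is available; random numbers stand in for real embeddings, and `pca_2d` is a hypothetical helper rather than the plotting code behind the figures on this page.

```python
import numpy as np


def pca_2d(embeddings):
    """Project row vectors onto their first two principal components."""
    X = np.asarray(embeddings, dtype=float)
    centered = X - X.mean(axis=0)  # PCA operates on mean-centered data
    # Rows of vt are the principal directions, ordered by decreasing singular value.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:2].T


# Stand-in data: 15 nodes with 128-dimensional vectors (not real DeepWalk output).
rng = np.random.default_rng(0)
fake_embeddings = rng.normal(size=(15, 128))
points = pca_2d(fake_embeddings)
```

Each row of `points` is the 2D position of one node, ready for a scatter plot.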

- There is a clear segregation of nodes in the left chart, which shows the vector space of the embedding. This showcases how DeepWalk can transform a graph from a force layout visualization to a vector space visualization while maintaining some of the structural properties. The heatmap plot also hints at a clear segregation of the graph into 3 clusters.

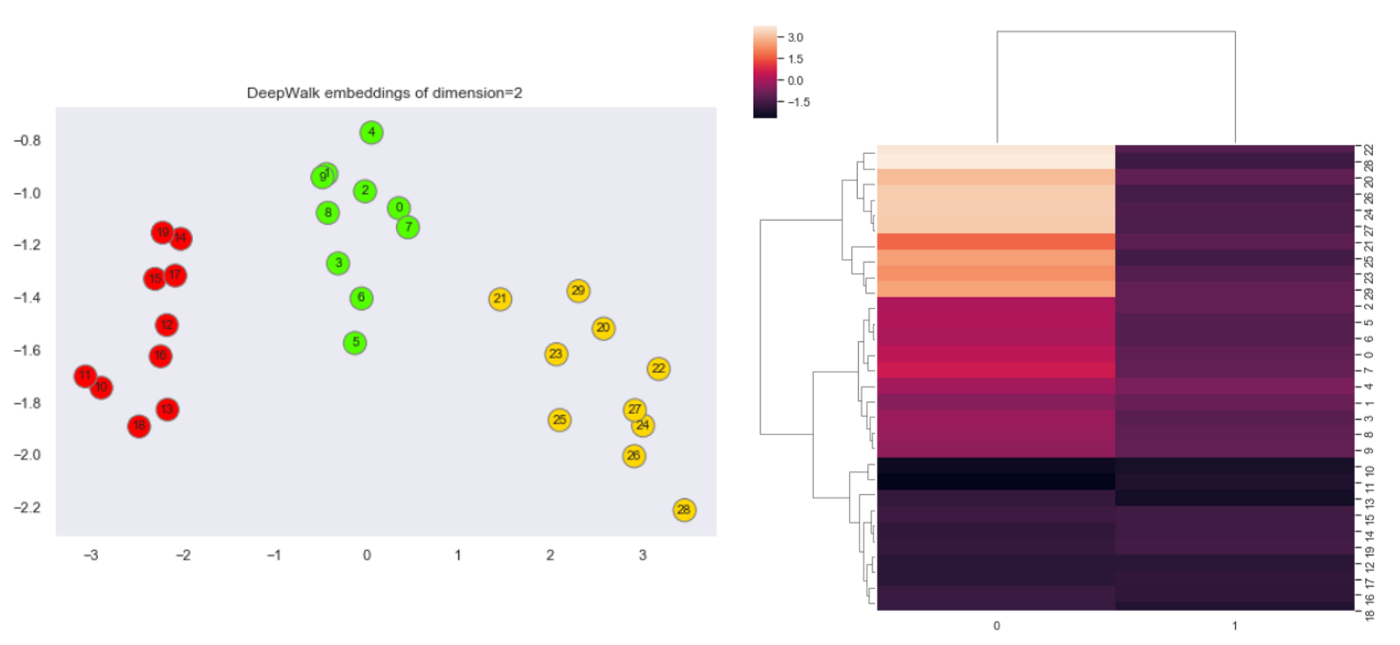

- Another important thing to note is that when the graph is not very complex, we can get by with a lower-dimensional embedding as well. This not only reduces the dimensions but also eases optimization and convergence, as there are fewer parameters to train in skip-gram. To demonstrate this, we will create an embedding of size only 2. This can be done by setting the parameter on the DeepWalk object, `dw = DeepWalk(dimensions=2)`. We will again visualize the same plots.

- Both plots again hint at the same number of clusters in the graph, and all this using less than 2% of the previous dimensions (from 128 down to 2, i.e. about 1.6%).

Conclusion

- As the answer to the analogy `NLP - word2vec + GraphNeuralNetworks = ?` can arguably be DeepWalk (is it? 🙂), it leads to two interesting points:

  - DeepWalk's impact in GNN can be analogous to Word2Vec's in NLP. And it's true, as DeepWalk was one of the first approaches to use NN for node embeddings. It was also a cool example of how a proven SOTA technique from another domain (here, NLP) can be ported to and applied in a completely different domain (here, graphs).

  - As DeepWalk was published a while ago (in 2014, which is a lifetime in AI research), there are now lots of other techniques that can do the job better, like Node2Vec or even graph convolution networks like GraphSAGE.

- That said, just as word2vec is the best starting point for learning deep learning based NLP, I think DeepWalk is in the same sense a good beginning for deep learning based graph analysis.

Cheers