Q-Learning

Introduction

- Q-learning is a RL algorithm that computes an expected reward for taking an action from any given state. The expected reward is a composition of the immediate reward and the future rewards possible from the new transitioned state. It is an iteration based algorithm that adjusts the scores over time, and given infinite exploration time it can identify an optimal action-selection policy.

Note

Q-learning does not requires to model the environment, hence it is model-free (check here for more details).

- To better grasp the intuition, let's understand it through a Grid world example before further complications creeps in.

The Beer game in Grid world

- Think of Grid World as a

nDworld (environment) where an agent will live and traverse. The environment consists of multiple cells, where each cell denotes one state, and it could deliver certain reward (positive or negative) to reach that state. The agent at any given time, will only have the knowledge of the current state and the information it gained in previous episodes. - Now imagine we drop an agent in a 2D grid world that contains a beer (that we want to find) and a pit (that we want to avoid). The agent traverse the world until it either finds the beer or drops into the pit. If we make the agent play this game 1000s of times, how should the agent behave overtime?

- The answer seems simple -- at first the agent will have no knowledge of the world, so it will stumble across the env, like a person performing coin toss to decide his fate

. Sometimes, it will find the beer or the pit, and at either points, it starts again.

- The interesting part is, after each episode the agent will become more and more aware of the world. And after many episodes it would have developed a detailed map, that guides to the beer with details of the areas you should not go to (as it contains the pit) 😎

Bellman's Equation

- The next question is how to capture the details of the map? That's where bellman's equation comes into the picture. Basically it learns Q (quality) scores, that represents expected reward, for taking an action \(a\) from a state \(s\). If we capture this for all the states and actions in the world then viola, we have the map!

- The formulation of Q-score is given below.

- Here, \(Q(s_{t},a_{t})\) is the Q-score for taking action \(a_t\) in state \(s_t\). \(r_t\) is the reward we get to perform the action. \(\gamma\) is the discount factor which is multiplied with the current reward estimation for the best possible action from the next step \(s_{t+1}\). The discount factor (range 0 to \(\inf\)) is used to adjust the importance to be given to immediate reward (low \(\gamma\)) or future reward (high \(\gamma\)).

- The formulation to update the Q-score is given below, where \(\alpha\) is the learning rate (yes, like the Neural networks

)

- Below is an example of a 2D grid world, where we have set the middle cell with a reward of +1 and we perform multiple iterations of the q-learning.

Hint

If you want to create and play around with your 2D grid world, try this Interactive Q-Learning playgorund by yours truly. [1]

Code

Exploring MountainCar env

- Now let's get started with some action i.e. coding. We will train a RL agent using Q-learning to beat the game of MountainCar available on OpenAI's Gym package that provides a simple interface to represent and play with general RL problems.

- Before that, let's understand some fundamentals of the MountainCar game environment.

- Aim: To get the car to reach the yellow flag

- Observation (states):

- Position: -1.2 to 0.6

- Velocity: -0.07 to 0.07

- Actions:

- Push left: 0

- No push: 1

- Right push: 2

- Reward:

- Reached yellow flag: +1

- Other: -0.5

- Why is this difficult? Bcoz a normal continous right push will not work, to win we need to oscillate as we need momentum from left.

- We can explore the same via code as shown below,

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 | |

Training Agent using Q-learning

Hint

Before starting with this section, why not try to code up some basic logic to beat the game? You could try to always push right or oscillate left and right at certain intervals. Give it try, it will be fun

- The code shared below (credits to gkhayes) trains a Q-learning agent to play the MountainCar game. It tries to create a Q-table (that contains Q-scores) for all the combination of states and actions. As the states are continous (they are in float), it is first discretized to make it a finite state problem. The final Q-table is of the shape

[19, 15, 3], with 19 possible states for position, 15 states for velocity and 3 actions.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 | |

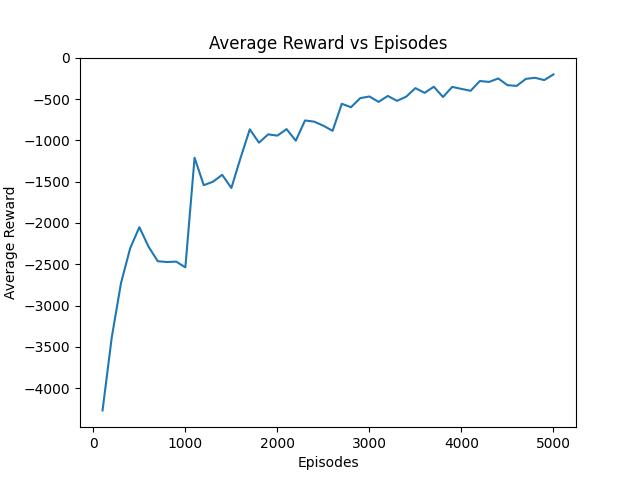

- Running the above code trains a RL agent for 5000 episodes and saves the Q-table as numpy array. It also computes the average reward vs episodes graph that is shown below. Do note how the agent is able to get more rewards over more training!

Inference of trained agent

- To run the trained model, we can use the saved Q-table to select the best action given any state in the game. Below is the code for the same,

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 | |

- There you go!!