Compute and AI Services

- Gone are the days when we needed to buy high-end devices to do literally anything. Today there is a plethora of services available online (and many of them are free!) that provide not only compute to use as you see fit, but also generic AI services.

- Let's look into some of the famous and widely used compute and AI services.

CaaS: Compute as a Service

In this section we will cover some of the famous (and at least partly free) platforms that provide compute-as-a-service (CaaS). Sometimes a CaaS is a plain virtual machine, sometimes it is a cluster of nodes, and in other cases it is a Jupyter-like coding environment. Let's go through some examples.

Google Colab

Introduction

- Colaboratory, or "Colab" for short, is a browser-based Jupyter notebook environment that is available for free. It requires no installation and even provides free access to GPUs and TPUs.

- The main disadvantages of Colab are that you cannot run long-running jobs (sessions are limited to a maximum of 12 hours), GPUs are subject to availability, and with consistent usage of Colab it might take longer to get GPU access.

- Google provides Pro and Pro+ options, which are paid subscriptions to Colab ($10 and $50 per month, respectively). While they provide longer background execution time and better compute (among other things), they do not guarantee GPU and TPU access all the time. Remember, Colab is not an alternative to a full-blown cloud computing environment. It's just a place to test out some ideas quickly.

Google Colab Snippets

Run tensorboard to visualize embeddings

- Taken from: how-to-use-tensorboard-embedding-projector

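The snippet itself is not reproduced here, so the following is only a minimal sketch of one common approach, assuming PyTorch and TensorBoard are installed (as they usually are in Colab). The toy vectors, the labels, the helper names and the `runs/embedding_demo` log directory are illustrative assumptions.

```python
def build_toy_embeddings():
    """Return a few toy 3-d vectors and one label per vector (illustrative data)."""
    vectors = [[0.0, 0.0, 1.0], [0.0, 1.0, 0.0], [1.0, 0.0, 0.0], [1.0, 1.0, 1.0]]
    labels = ["001", "010", "100", "111"]
    return vectors, labels


def write_embeddings(log_dir="runs/embedding_demo"):
    """Write the toy embeddings in the format read by the TensorBoard projector."""
    # Imported lazily so the helper above can be used without PyTorch installed.
    import torch
    from torch.utils.tensorboard import SummaryWriter

    vectors, labels = build_toy_embeddings()
    writer = SummaryWriter(log_dir=log_dir)
    writer.add_embedding(torch.tensor(vectors), metadata=labels)
    writer.close()
    return log_dir


# In a Colab cell, after calling write_embeddings(), load the TensorBoard
# notebook extension and point it at the parent log directory:
#   %load_ext tensorboard
#   %tensorboard --logdir runs
```

Once TensorBoard opens, the embeddings should appear under the projector view, with each point labelled by its metadata string.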

Connect with Google Drive and access files

- This code will prompt you to provide authorization to access your Google Drive.

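A minimal sketch of the mounting step, assuming it runs inside a Colab runtime (the `google.colab` package is only available there). The helper names and the example file `data/train.csv` are hypothetical.

```python
from pathlib import PurePosixPath

MOUNT_POINT = "/content/drive"  # conventional mount point in Colab


def mount_drive(mount_point=MOUNT_POINT):
    """Mount Google Drive; Colab shows an authorization prompt on first use."""
    # google.colab only exists inside a Colab runtime, so import it lazily.
    from google.colab import drive

    drive.mount(mount_point)


def drive_file(relative_path, mount_point=MOUNT_POINT):
    """Build the path of a file under "MyDrive" once the drive is mounted."""
    return PurePosixPath(mount_point) / "MyDrive" / relative_path


# Example (inside Colab):
#   mount_drive()
#   with open(str(drive_file("data/train.csv"))) as handle:
#       print(handle.readline())
```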

Kaggle

- Apart from being famous for hosting big AI/ML competitions, the other cool thing about the site is that it also provides free GPU/TPU compute! All you have to do is sign up and create a new notebook, and then you can start using it: import their datasets or your own, and start training your AI models!

- All of this, of course, has a limit: you get a minimum of 30 hours of GPU usage per week, and at most 20 hours of TPU usage per week. Another catch is that you can only use a GPU/TPU for 9 hours continuously.

- That said, Kaggle notebooks are a great place to perform your personal experiments or participate in new competitions to enhance your expertise. For more official work (industry or academic), do remember that you are putting your dataset in a third party's hands.

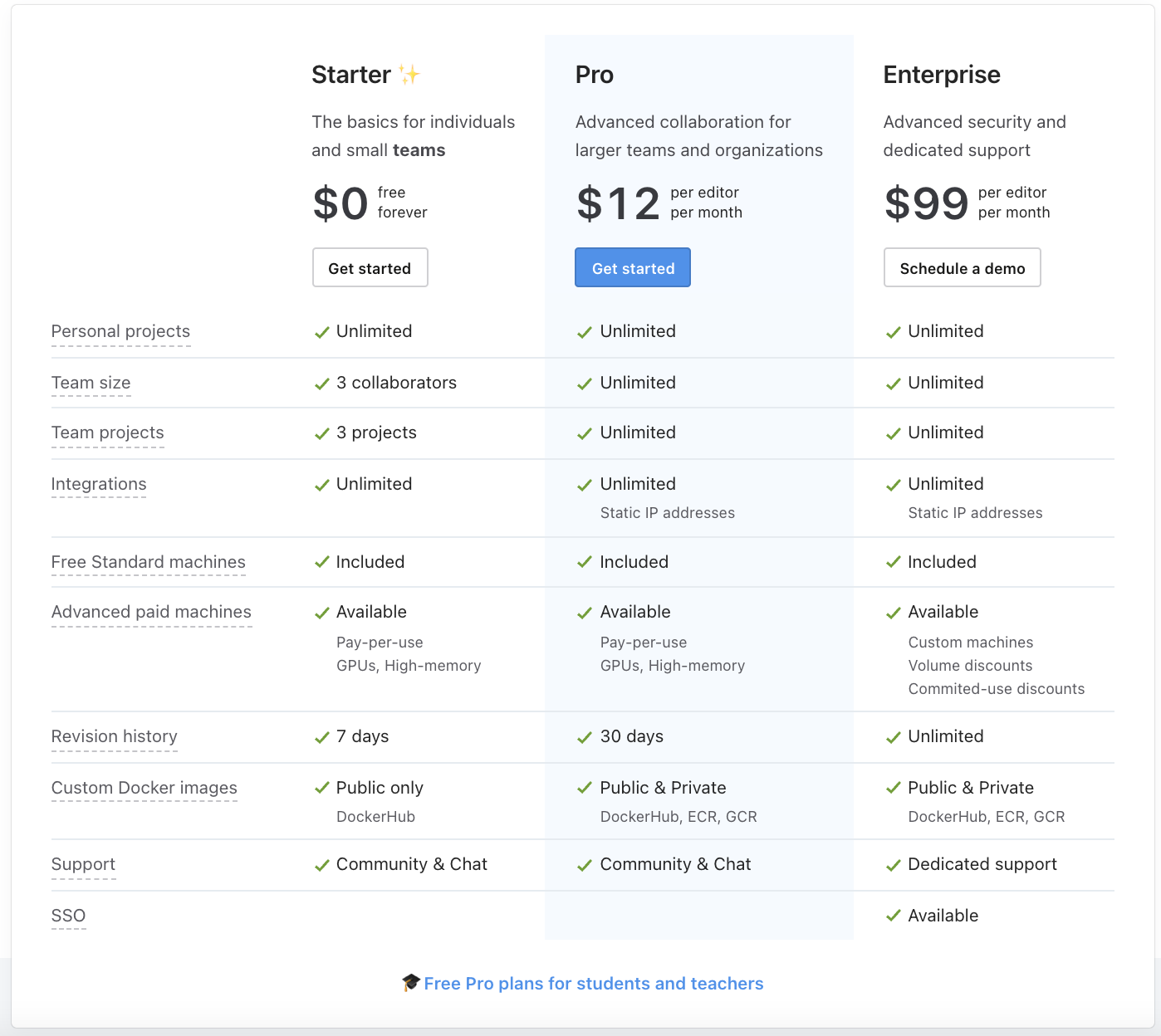

DeepNote

- DeepNote provides a highly customised Jupyter-like notebook. It's one of the richest services in terms of features. Here are some examples: you can create projects with multiple notebooks, create teams and collaborate with your colleagues live, quickly visualize datasets from notebooks, schedule notebooks, host reports, and best of all, they have a free tier.

- There are multiple pricing tiers. To begin with, you can try out the free tier and get up to 750 Standard compute hours per month. That's like keeping one project (which could consist of multiple notebooks) open for the complete month! (offer subject to change; was valid at the time of writing)

Hint

Planning to try DeepNote out? Use the referral link to get 20 free Pro compute hours (that's up to 16 GB RAM and 4 vCPUs).

MLaaS: Machine Learning as a Service

In this section we will cover some of the famous platforms that provide Machine-Learning-as-a-Service (MLaaS). These MLaaS platforms take care of the infrastructure-related aspects of data storage, data preparation, model training and model deployment. On top of this, they provide a repository of classical ML algorithms that can be leveraged to create data science solutions. The idea is to make building data science solutions a plug-and-play activity, as the platform takes care of most of the engineering aspects. Let's go through some examples.

AWS Sagemaker (Amazon)

- AWS Sagemaker is a cloud-based service that helps data scientists with the complete lifecycle of a data science project.

- It has specialised tools that cover the following stages of data science projects:

- Prepare: It's the pre-processing step of the project. Some of the important services are "Ground Truth", which is used for data labeling/annotation, and "Feature Store", which is used to provide consistent data transformation across teams and across services like training and deployment.

- Build: It's where a data scientist spends most of their time coding. "Studio Notebooks" provides Jupyter notebooks that can be used to quickly check ideas and build the model.

- Train & Tune: It's where you can efficiently train and debug your models. "Automatic Model Tuning" can be used for hyper-parameter tuning of the model, i.e. finding the parameters that provide the highest accuracy. "Experiments" can be used to run and track multiple experiments; it's an absolute must if your project requires multiple runs to find the best architecture or parameters.

- Deploy & Manage: The final stage, where you deploy your model for the rest of the world to use. "One-Click Deployment" can be used to efficiently deploy your model to the cloud. "Model Monitor" can be used to monitor your deployed model, for example to detect drift in data or model quality over time.

- AWS charges a premium for providing all of these features under a single umbrella. For more detailed pricing information, you can estimate the cost using this.

Hint

As AWS Sagemaker is a costly affair, several DS teams try to find workarounds. Two common ones are using spot instances for training, as they are cheaper, and using AWS Lambda for deploying small models.
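The spot-instance workaround could look roughly like the sketch below. It assumes the SageMaker Python SDK v2 and its `Estimator` class; the helper names, the instance type and the time limits are illustrative assumptions, not recommendations.

```python
def spot_training_kwargs(max_run_seconds=3600, extra_wait_seconds=1800):
    """Build Estimator keyword arguments that request managed spot training."""
    return {
        "use_spot_instances": True,
        "max_run": max_run_seconds,
        # max_wait covers the training time plus the time spent waiting for
        # spot capacity, so it must not be smaller than max_run.
        "max_wait": max_run_seconds + extra_wait_seconds,
    }


def build_spot_estimator(image_uri, role_arn, instance_type="ml.m5.xlarge"):
    """Create an Estimator configured for spot training (needs the sagemaker SDK)."""
    from sagemaker.estimator import Estimator  # imported lazily

    return Estimator(
        image_uri=image_uri,      # training container image (caller-supplied)
        role=role_arn,            # IAM role ARN (caller-supplied)
        instance_count=1,
        instance_type=instance_type,
        **spot_training_kwargs(),
    )
```

Spot capacity can be reclaimed mid-training, so this setup tends to suit jobs that checkpoint their progress.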

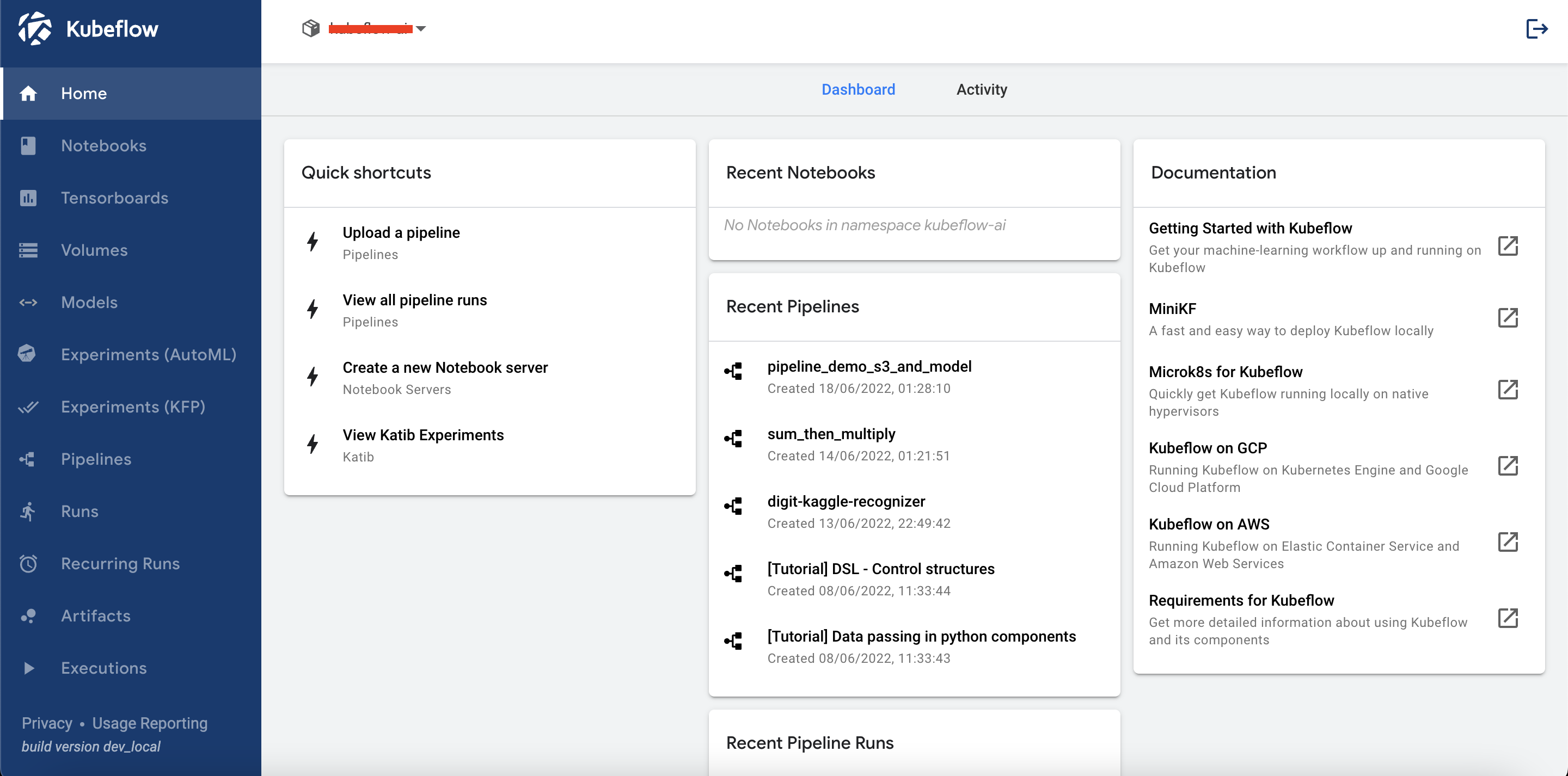

Kubeflow

Introduction

- Kubeflow is an open-source project that is dedicated to making development, tracking and deployments of machine learning (ML) workflows on Kubernetes simple, portable and scalable. As per their website, "Anywhere you are running Kubernetes, you should be able to run Kubeflow."

- While there are many paid MLaaS like Sagemaker, Azure ML Services and Google AI Platform, Kubeflow is an outlier that provides most of the features present in the paid platforms, but for free!

- We can deploy Kubeflow on Kubernetes by following the guide on their website. Once done, you can boot it up and it should look as shown below.

Hint

Go with Kubeflow if you are setting up a new AI team for your organization or school and don't want to commit to costly services like Sagemaker. But beware, it does require DevOps knowledge, as you will need to set up Kubernetes and manage it. While Kubeflow itself is completely free, you will still be charged for the compute you utilize. To cut down the cost, in case you are connecting Kubeflow with AWS, you can use Spot instances.

Components

- Kubeflow provides several individual components that help with the ML lifecycle. Note that we can even pick and choose the components we want during installation. Some of them are:

- Notebook: here we can create Jupyter notebook servers and perform quick experimentation. Each server is assigned its own volume (persistent disk storage). On booting up a server, a new compute instance is procured and you will see the JupyterLab page, where you can create multiple notebooks, scripts or terminals. In case of an AWS connection, the compute could be an EC2 instance or a Spot instance, based on your configuration.

- Pipeline: here we define one ML project. Kubeflow supports defining a pipeline as a DAG (Directed Acyclic Graph), where each individual function or module is one node. A pipeline represents a graph of modules, where execution happens in a sequential or parallel manner while respecting the inter-module dependencies, e.g. `module_2` requires the output of `module_1`. While this leads to modularization of the code, the real intention is to make the pipeline execution traceable and the modules independent of each other. This is achieved by containerizing each module and running them on different instances, making the process truly independent.

- Experiments: on a single ML project, we may want to run multiple experiments, e.g. (1) `test_accuracy` to try out a couple of parameters and compare accuracy, and (2) `test_performance` to compare latency on different shapes and sizes of data. This is where you define individual experiments.

- Runs: one execution of an experiment for a pipeline is captured here, e.g. for the `test_accuracy` experiment of an MNIST pipeline, perform one run with `learning_rate = 0.001`.

- Experiments (AutoML): we cannot try all the parameters for `test_accuracy` one by one. The obvious question is, why not automate it by doing hyperparameter tuning? AutoML is what you are looking for!

- Models: after all the experimentation and model training, we would like to host/deploy the model. It can be done using this component.
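To make the DAG idea behind the Pipeline component concrete, here is a small sketch in plain Python (it does not use Kubeflow). It groups modules into stages that respect their dependencies, using the standard-library `graphlib` module (Python 3.9+). The module names beyond `module_1` and `module_2` are made up for illustration.

```python
from graphlib import TopologicalSorter

# Each key is a module; its value is the set of modules whose output it needs.
dependencies = {
    "module_1": set(),
    "module_2": {"module_1"},
    "module_3": {"module_1"},
    "module_4": {"module_2", "module_3"},
}


def execution_stages(deps):
    """Group modules into stages; modules in the same stage can run in parallel."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises CycleError if the graph is not acyclic
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # modules whose inputs are all available
        stages.append(ready)
        sorter.done(*ready)
    return stages


stages = execution_stages(dependencies)
print(stages)  # [['module_1'], ['module_2', 'module_3'], ['module_4']]
```

Here `module_2` and `module_3` only depend on `module_1`, so they land in the same stage and could run in parallel, while `module_4` waits for both.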

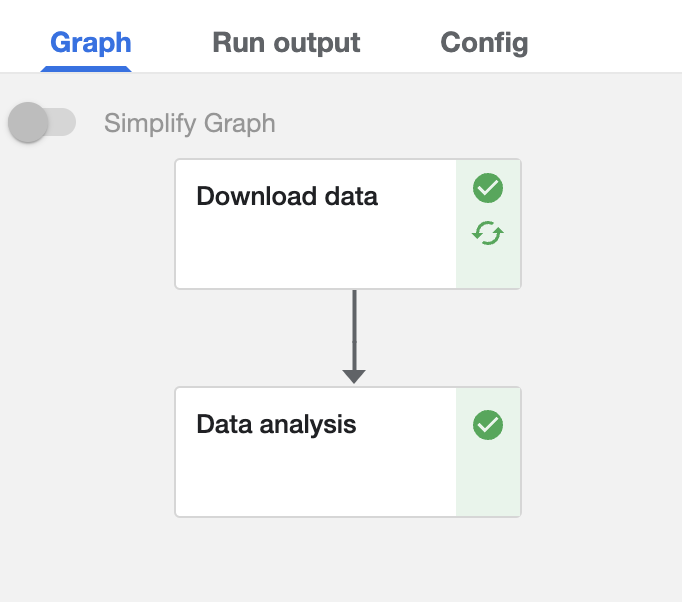

Creating and running Pipeline

- Let's start coding. For this tutorial, we will create a simple Kubeflow pipeline with two steps:

- Step 1 - Download data: where we will download data from an S3 bucket and pass the downloaded data to the next step for further analysis.

- Step 2 - Perform analysis: we will perform some rudimentary analysis on the data and log the metrics.

- We will try to go through some basic and advanced scenarios, so that you can refer to the code to create your own pipeline, even if it is completely different. After creating the pipeline, we will register it, create an experiment and then execute a run.

- Let's start with importing the relevant packages. Make sure to install `kfp` with the latest version by using `pip install kfp --upgrade`

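The import block is not reproduced here. The sketch below is an assumption based on the names used later in this section (`InputPath`, `OutputPath`, `base_image`, `packages_to_install`), which match the v1 Kubeflow Pipelines SDK. Newer SDK releases reorganised these names, so the imports are guarded.

```python
from typing import NamedTuple  # used to declare named component outputs

try:
    import kfp
    from kfp.components import InputPath, OutputPath, create_component_from_func

    KFP_V1_AVAILABLE = True
except ImportError:
    # Either kfp is not installed, or the installed release no longer exposes
    # these v1 names under kfp.components.
    KFP_V1_AVAILABLE = False

print("kfp v1-style API available:", KFP_V1_AVAILABLE)
```

If this prints `False` on a machine where `kfp` is installed, the installed SDK is probably a newer major version, and the component code in this section would need adapting.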

- Now we will create the first module that downloads data from an S3 bucket. Note that Kubeflow takes care of the logistics of data availability between modules, but we need to share the path where the data is downloaded. This is done by annotating the parameter with `OutputPath(str)` in the function signature. The process is similar for ML models as well: we can download a model in the first module, perform training in the second, and perform a performance check in the third.

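The module's code is not reproduced here, so this is a shortened sketch of what such a download component could look like with the v1 SDK. The function name, its `bucket` and `key` parameters, the `python:3.9` base image and the fallback branch (which only lets the plain function run without `kfp`) are assumptions.

```python
try:
    from kfp.components import OutputPath, create_component_from_func
except ImportError:
    # Fallback so the plain function can still be run without the kfp v1 SDK.
    create_component_from_func = None

    def OutputPath(type_):
        return type_


def download_data(bucket: str, key: str, data_path: OutputPath(str)):
    """Download one object from S3 and store it at the path Kubeflow provides."""
    # Imports live inside the function because only the function body is
    # shipped to the component's container.
    import os
    import boto3

    # Make sure the parent directory exists (harmless if it already does).
    os.makedirs(os.path.dirname(data_path) or ".", exist_ok=True)
    boto3.client("s3").download_file(Bucket=bucket, Key=key, Filename=data_path)
    print(f"Downloaded s3://{bucket}/{key} to {data_path}")


# Convert the function into a pipeline component. base_image and
# packages_to_install describe the container the step runs in
# (both values here are assumptions).
if create_component_from_func is not None:
    download_data_op = create_component_from_func(
        download_data,
        base_image="python:3.9",
        packages_to_install=["boto3"],
    )
```

With the v1 SDK, the `_path` suffix is dropped from the parameter name, so downstream steps would refer to this output as `data`.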

- At the end of the module, we convert the function to a Kubeflow pipeline component. As the component will run on an independent instance, we need to provide the `base_image` and `packages_to_install` information as well.

- Next, we will create the second module that loads the data from the first module and just returns some dummy metrics. In reality, you can do a lot of things here, like data preprocessing, data transformation or EDA. For now, we will just stick with a dummy example.

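As with the download step, the code for this module is not reproduced here. The following is a shortened sketch under the same v1 SDK assumption; the function name, the metric values and the `python:3.9` base image are illustrative assumptions.

```python
from typing import NamedTuple

try:
    from kfp.components import InputPath, create_component_from_func
except ImportError:
    # Fallback so the plain function can still be run without the kfp v1 SDK.
    create_component_from_func = None

    def InputPath(type_):
        return type_


def analyze_data(
    data_path: InputPath(str),
) -> NamedTuple("Outputs", [("mlpipeline_metrics", "Metrics")]):
    """Read the downloaded file and return dummy metrics for the Kubeflow UI."""
    import json
    from collections import namedtuple

    # data_path points at the file written by the previous step.
    with open(data_path) as handle:
        row_count = sum(1 for _ in handle)
    print(f"Loaded {row_count} rows from {data_path}")

    # Dummy values, serialised in the JSON layout used for pipeline metrics.
    metrics = {
        "metrics": [
            {"name": "accuracy", "numberValue": 0.9, "format": "PERCENTAGE"},
            {"name": "f1", "numberValue": 0.8, "format": "RAW"},
        ]
    }
    outputs = namedtuple("Outputs", ["mlpipeline_metrics"])
    return outputs(json.dumps(metrics))


if create_component_from_func is not None:
    analyze_data_op = create_component_from_func(analyze_data, base_image="python:3.9")
```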

- In the function, we define `data_path` as `InputPath(str)` and later use it directly to read the data, without the need to manually share the data across instances.

- We define `mlpipeline_metrics` as an output (through the return type annotation), as this is mandatory if you want to log metrics. In the function body, we log dummy `accuracy` and `f1` metrics and then return them. Finally, we also create the Kubeflow component.

- Next, we will combine all of the components together to create the pipeline.

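The pipeline code is not reproduced here either. The sketch below shows one way the pieces could be wired together with the v1 SDK and the `kubernetes` Python client. The node label used to select spot nodes (`eks.amazonaws.com/capacityType=SPOT`, as set on EKS managed node groups) is an assumption that depends on your cluster, and `download_op` / `analyze_op` stand for the two components created from the earlier modules.

```python
SPOT_LABEL_KEY = "eks.amazonaws.com/capacityType"  # assumption: EKS managed node groups
SPOT_LABEL_VALUE = "SPOT"


def spot_affinity():
    """Build a node affinity that only allows scheduling on spot-labelled nodes."""
    from kubernetes.client import (
        V1Affinity,
        V1NodeAffinity,
        V1NodeSelector,
        V1NodeSelectorRequirement,
        V1NodeSelectorTerm,
    )

    requirement = V1NodeSelectorRequirement(
        key=SPOT_LABEL_KEY, operator="In", values=[SPOT_LABEL_VALUE]
    )
    selector = V1NodeSelector(
        node_selector_terms=[V1NodeSelectorTerm(match_expressions=[requirement])]
    )
    return V1Affinity(
        node_affinity=V1NodeAffinity(
            required_during_scheduling_ignored_during_execution=selector
        )
    )


def build_pipeline(download_op, analyze_op):
    """Return a pipeline function that chains the two components."""
    from kfp import dsl  # imported lazily; requires the v1 SDK

    @dsl.pipeline(name="my_pipeline", description="Download data from S3 and analyze it")
    def my_pipeline(bucket: str, key: str):
        download_task = download_op(bucket=bucket, key=key)
        # The output of step 1 becomes the input of step 2.
        analyze_task = analyze_op(data=download_task.outputs["data"])
        for task in (download_task, analyze_task):
            task.add_affinity(spot_affinity())

    return my_pipeline


def compile_pipeline(pipeline_func, package_path="my_pipeline.zip"):
    """Compile the pipeline into a package that can be uploaded from the UI."""
    import kfp.compiler

    kfp.compiler.Compiler().compile(pipeline_func, package_path)
    return package_path
```

Compiling does not need a connection to the cluster, so this sketch leaves out the client object. The resulting `my_pipeline.zip` can be uploaded from the pipeline tab.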

- We start with importing relevant modules and creating the pipeline function where we define the name and description of the pipeline. Next we connect the components together.

- Within the pipeline function, we define and set the node affinity so that we only use spot instances for the computation. This will keep our cost to the minimum.

- Finally, we create a Kubeflow client and compile the complete pipeline. This will create a zip file of the compiled pipeline that we can upload from the pipeline tab in Kubeflow.

- Next, we can create an experiment and then perform a run from the respective Kubeflow tabs. The process is quite simple and can be easily done from the UI. Once we have executed a run and the process is completed, we can see the individual modules and their status on the run page, as shown below.

my_pipeline pipeline

- And with that, we are done!